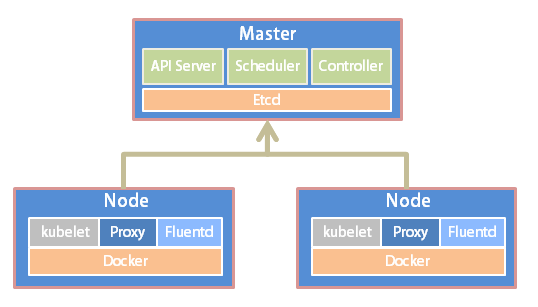

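Introduction to Kubernetes

Kubernetes (K8s for short) is an open-source container cluster management system that automates the deployment, scaling, and maintenance of container clusters. It is both a container orchestration tool and a leading distributed-architecture solution built on container technology. On top of Docker, it gives containerized applications deployment, resource scheduling, service discovery, and dynamic scaling, which makes large container clusters easier to manage.

A K8s cluster has two kinds of nodes: management (master) nodes and worker nodes. Management nodes run the cluster: they handle information exchange between nodes and task scheduling, and they manage the lifecycle of containers, Pods, Namespaces, PVs, and similar objects. Worker nodes supply the compute resources for containers and Pods; all Pods and containers run on worker nodes. Each worker node talks to the management nodes through the kubelet service to manage container lifecycles, and it also communicates with the other nodes in the cluster.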

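I. Environment preparation

Kubernetes runs on physical servers or virtual machines. This guide uses virtual machines for the test environment, with the hardware configuration shown in the table: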

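| IP address | Node role | CPU | Memory | Hostname | Disks |
| --- | --- | --- | --- | --- | --- |
| 10.0.201.1 | master | >= 2 cores | >= 2 GB | k8s-01 | sda, sdb |
| 10.0.201.2 | worker | >= 2 cores | >= 2 GB | k8s-02 | sda, sdb |
| 10.0.201.3 | worker | >= 2 cores | >= 2 GB | k8s-03 | sda, sdb |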

Note: perform the following steps on all nodes.

1. Edit /etc/hosts to add hostname resolution.

    cat <<EOF >> /etc/hosts
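The body of the heredoc is not shown above. A minimal sketch of what it could contain, assuming only the three hosts from the table, is given below. The function names `hosts_entries` and `add_hosts_entries` are hypothetical, and the target file is passed as an argument so the helper can be tried on a scratch copy before touching /etc/hosts.

```shell
#!/bin/sh
# Sketch: add the cluster hosts from the table to a hosts file.
# hosts_entries and add_hosts_entries are illustrative names, not part of
# the original guide.

# Print one "IP hostname" pair per line (values taken from the table).
hosts_entries() {
  cat <<EOF
10.0.201.1 k8s-01
10.0.201.2 k8s-02
10.0.201.3 k8s-03
EOF
}

# Append each entry to the file given as $1 unless that exact line exists.
add_hosts_entries() {
  hosts_entries | while IFS= read -r line; do
    grep -qxF "$line" "$1" || printf '%s\n' "$line" >> "$1"
  done
}
```

On a real node this would be called as root with `add_hosts_entries /etc/hosts`. Because existing lines are skipped, running it a second time leaves the file unchanged.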

2. Disable the firewall, SELinux, and swap.

    systemctl stop firewalld
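Only the first of the six commands for this step is shown. The sketch below is one plausible way to cover all three parts on a CentOS-style system with GNU sed. The other five commands and the helper names `disable_selinux_config` and `comment_swap_entries` are assumptions, not the original text. The file-editing helpers take a path so they can be tried on copies, and nothing touches the system unless the script is run with `--apply` (an illustrative switch).

```shell
#!/bin/sh
# Sketch: disable firewalld, SELinux and swap. Assumes GNU sed.

# Set SELINUX=disabled in the SELinux config file given as $1.
# SELINUXTYPE= and comment lines do not match the pattern and are kept.
disable_selinux_config() {
  sed -i 's/^SELINUX=.*/SELINUX=disabled/' "$1"
}

# Comment out every active swap entry in the fstab-style file given as $1.
comment_swap_entries() {
  sed -E -i 's/^([^#].*[[:space:]]swap[[:space:]].*)$/#\1/' "$1"
}

# Only modify the running system when explicitly asked to.
if [ "${1:-}" = "--apply" ]; then
  systemctl stop firewalld
  systemctl disable firewalld
  setenforce 0                                  # permissive until reboot
  disable_selinux_config /etc/selinux/config    # persistent setting
  swapoff -a                                    # turn swap off now
  comment_swap_entries /etc/fstab               # keep it off after reboot
fi
```

Swap has to stay off across reboots, which is why the sketch edits /etc/fstab in addition to calling `swapoff -a`.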

3. Configure a domestic (China-based) yum mirror.

    yum install -y wget

II. Preflight checks

1. Let iptables see bridged traffic

Make sure the br_netfilter module is loaded. Run lsmod | grep br_netfilter to check; to load it, run sudo modprobe br_netfilter.

2. For iptables on your Linux nodes to see bridged traffic correctly, make sure net.bridge.bridge-nf-call-iptables is set to 1 in your sysctl configuration. For example:

    cat <<EOF | sudo tee /etc/modules-load.d/k8s.conf

    cat <<EOF | sudo tee /etc/sysctl.d/k8s.conf
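The two heredoc bodies are cut off above. Assuming they follow the upstream kubeadm installation guide, the files would contain the lines printed by the hypothetical helpers below. The `bridge-nf-call-ip6tables` line is an assumption, since the text only names the iptables setting, and the `--apply` switch is illustrative.

```shell
#!/bin/sh
# Sketch: contents for the module and sysctl files used in this step.

# Module to load at boot.
k8s_modules_conf() {
  cat <<EOF
br_netfilter
EOF
}

# Kernel settings that let iptables see bridged traffic.
k8s_sysctl_conf() {
  cat <<EOF
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
}

# Only write the files and reload settings when explicitly asked to.
if [ "${1:-}" = "--apply" ]; then
  k8s_modules_conf | sudo tee /etc/modules-load.d/k8s.conf
  k8s_sysctl_conf | sudo tee /etc/sysctl.d/k8s.conf
  sudo modprobe br_netfilter
  sudo sysctl --system    # reload all sysctl configuration files
fi
```

After applying, `sysctl net.bridge.bridge-nf-call-iptables` should report the value 1 on a node where the module is loaded.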

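III. Software installation

Note: perform the following steps on all nodes.

1. Install Docker

    sudo yum install -y yum-utils

Change Docker's cgroup driver to systemd:

    cat <<EOF > /etc/docker/daemon.json
    {
      "exec-opts": ["native.cgroupdriver=systemd"]
    }
    EOF
    systemctl enable docker.service

The Docker service provides the runtime resources for containers and is the base platform on which all containers run.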

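2. Install kubeadm, kubelet, and kubectl

Configure a domestic Kubernetes yum repository:

    cat <<EOF > /etc/yum.repos.d/kubernetes.repo

    yum install -y kubelet kubeadm kubectl --disableexcludes=kubernetes

kubelet communicates with the rest of the cluster and manages the lifecycle of the Pods and containers on its own node. kubeadm is the automated deployment tool for Kubernetes; it makes deployment easier and faster. kubectl is the command-line tool for managing a Kubernetes cluster.

    systemctl daemon-reload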

IV. Deploy the master node

Note: perform the following steps on the master node.

1. Initialize the Kubernetes cluster on the master.

Using flannel:

    kubeadm init --apiserver-advertise-address=10.0.201.1 \
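The rest of the kubeadm init command is not shown. As a hedged sketch, the flags below are real kubeadm init options, but their values are inferred: the pod CIDR 10.244.0.0/16 is the one used in the Calico step of this guide and matches flannel's default, the control-plane endpoint is taken from the kubeadm join examples, and `--upload-certs` is an assumption made because control-plane nodes are joined later. The helper name `kubeadm_init_cmd` and the `--apply` switch are illustrative.

```shell
#!/bin/sh
# Sketch: build one possible complete kubeadm init command line.
# Only --apiserver-advertise-address comes from the guide itself;
# the other flags and values are assumptions (see the text above).
kubeadm_init_cmd() {
  printf '%s' "kubeadm init"
  printf ' %s' \
    "--apiserver-advertise-address=10.0.201.1" \
    "--control-plane-endpoint=k8s.dabing.space:6443" \
    "--pod-network-cidr=10.244.0.0/16" \
    "--upload-certs"
  printf '\n'
}

# Print the command by default; run it only when called with --apply.
if [ "${1:-}" = "--apply" ]; then
  eval "$(kubeadm_init_cmd)"
else
  kubeadm_init_cmd
fi
```

Printing first makes it easy to review the flags before committing to them, since changing the pod CIDR or endpoint after initialization means resetting the cluster.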

If you are working as the root user:

    export KUBECONFIG=/etc/kubernetes/admin.conf

Choose one Pod network add-on.

flannel

    kubectl apply -f https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml

Calico

    curl https://docs.projectcalico.org/manifests/calico.yaml -O

In the downloaded manifest, replace cidr: 192.168.0.0/16 with cidr: 10.244.0.0/16.
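The CIDR replacement can be scripted with sed. This is a sketch: `set_pod_cidr` is a hypothetical helper, and it assumes GNU sed and that the manifest contains the literal string 192.168.0.0/16.

```shell
#!/bin/sh
# Sketch: rewrite Calico's default pod CIDR in a downloaded manifest.
#   $1: manifest file
#   $2: new CIDR (defaults to 10.244.0.0/16, the value used in this guide)
set_pod_cidr() {
  sed -i "s#192\.168\.0\.0/16#${2:-10.244.0.0/16}#g" "$1"
}
```

A possible use is `set_pod_cidr calico.yaml && kubectl apply -f calico.yaml`. In some versions of the manifest the pool CIDR setting may be commented out, in which case it would also need to be uncommented by hand.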

Record the last part of the kubeadm init output. Other nodes run it to join the Kubernetes cluster.

Add a control-plane node:

    kubeadm join k8s.dabing.space:6443 --token 52osia.mqhmkakgvihmykhb \

Add a worker node:

    kubeadm join k8s.dabing.space:6443 --token 52osia.mqhmkakgvihmykhb \

Query tokens:

Create a token:

Generate the discovery-token-ca-cert-hash:

    openssl x509 -pubkey -in /etc/kubernetes/pki/ca.crt | openssl rsa -pubin -outform der 2>/dev/null | \
      openssl dgst -sha256 -hex | sed 's/^.* //'
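The commands for the two token steps did not survive in the text above. The standard kubeadm subcommands for them are `kubeadm token list` and `kubeadm token create`, noted in the comments below. The hash pipeline can also be wrapped in a small function; `ca_cert_hash` is an illustrative name, and the sketch assumes an RSA CA key, which is what kubeadm generates by default.

```shell
#!/bin/sh
# Sketch: helpers for joining nodes after the initial token has expired.
#
# Token management (run on the master):
#   kubeadm token list                          # show existing tokens
#   kubeadm token create --print-join-command   # new token + full join command

# Print the sha256 hash of the CA public key, as expected by
# --discovery-token-ca-cert-hash (prefix the result with "sha256:").
#   $1: path to the CA certificate (defaults to the kubeadm location)
ca_cert_hash() {
  openssl x509 -pubkey -noout -in "${1:-/etc/kubernetes/pki/ca.crt}" |
    openssl rsa -pubin -outform der 2>/dev/null |
    openssl dgst -sha256 -hex |
    sed 's/^.* //'
}
```

With `--print-join-command`, kubeadm prints a ready-made worker join command that already includes the hash, so computing it by hand is mainly useful for checking an existing value.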

2. Configure kubectl

    mkdir -p /root/.kube
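Only the first line of this step is shown. The sketch below follows the instructions that kubeadm init prints on success: create the directory, copy admin.conf, and fix ownership. `install_kubeconfig` is a hypothetical helper name, and it uses a plain `cp` rather than the interactive `cp -i`.

```shell
#!/bin/sh
# Sketch: install the cluster admin kubeconfig for the current user.
#   $1: source kubeconfig (on the master: /etc/kubernetes/admin.conf)
#   $2: home directory of the user who will run kubectl
install_kubeconfig() {
  mkdir -p "$2/.kube" &&
    cp "$1" "$2/.kube/config" &&
    chown "$(id -u):$(id -g)" "$2/.kube/config"
}
```

For root on the master this would be `install_kubeconfig /etc/kubernetes/admin.conf /root`, after which `kubectl get nodes` should work without setting KUBECONFIG.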

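V. Check the cluster status

Note: perform the following steps on the master node.

1. Run the following command on the master node to check the cluster status.

    kubectl get nodes

Look at the STATUS column: when every node shows Ready, the cluster is healthy.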
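The STATUS check can be automated. The sketch below reads the output of `kubectl get nodes --no-headers` on standard input and succeeds only if every node reports exactly Ready. The function name `all_nodes_ready` is illustrative.

```shell
#!/bin/sh
# Sketch: succeed only if every node line has STATUS (column 2) == Ready.
# An empty input counts as failure, because no nodes were reported.
all_nodes_ready() {
  awk '$2 != "Ready" { bad = 1 } END { exit (NR == 0 || bad) }'
}
```

A possible use is `kubectl get nodes --no-headers | all_nodes_ready && echo "cluster ready"`. A cordoned node shows a status such as Ready,SchedulingDisabled and is deliberately treated as not ready by this check.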

2. Create a Pod to verify that the cluster works.

    kubectl create deployment nginx --image=nginx

VI. Deploy Ingress

    helm upgrade --install ingress-nginx library/ingress-nginx \
      --set controller.admissionWebhooks.enabled=false

Create the TLS secret for the domain:

    kubectl create secret tls dabing.space-secret --cert=fullchain.pem --key=privkey.pem -n kubernetes-dashboard

VII. Deploy the Dashboard

Note: perform the following steps on the master node.

2. Deploy the Dashboard

    kubectl apply -f https://raw.githubusercontent.com/kubernetes/dashboard/v2.4.0/aio/deploy/recommended.yaml

Run the proxy (here, by editing the Service so that it uses type NodePort):

    [root@k8s-01 ~]# kubectl -n kubernetes-dashboard edit svc kubernetes-dashboard

    apiVersion: v1
    kind: Service
    metadata:
      annotations:
        kubectl.kubernetes.io/last-applied-configuration: |
          {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"labels":{"k8s-app":"kubernetes-dashboard"},"name":"kubernetes-dashboard","namespace":"kubernetes-dashboard"},"spec":{"ports":[{"port":443,"targetPort":8443}],"selector":{"k8s-app":"kubernetes-dashboard"}}}
      creationTimestamp: "2019-11-08T07:56:44Z"
      labels:
        k8s-app: kubernetes-dashboard
      name: kubernetes-dashboard
      namespace: kubernetes-dashboard
      resourceVersion: "5786"
      selfLink: /api/v1/namespaces/kubernetes-dashboard/services/kubernetes-dashboard
      uid: f289c60d-040f-42cf-b18d-db8c621f0a2a
    spec:
      clusterIP: 10.1.167.91
      ports:
      - port: 443
        protocol: TCP
        targetPort: 8443
        nodePort: 34430
      selector:
        k8s-app: kubernetes-dashboard
      sessionAffinity: None
      type: NodePort
    status:
      loadBalancer: {}

Note that nodePort 34430 lies outside the default NodePort range (30000-32767), so it is accepted only if the API server's --service-node-port-range has been extended to include it.

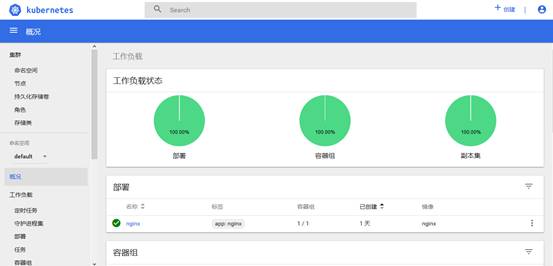

4. Open the Dashboard in a browser at https://10.0.201.1:34430 (the Dashboard Service forwards to the HTTPS port 8443, so the address uses https rather than http).

5. Create a simple user

    kubectl apply -f dashboard-adminuser.yaml

The manifest contains:

    apiVersion: v1
    kind: ServiceAccount
    metadata:
      name: admin-user
      namespace: kubernetes-dashboard
    ---
    apiVersion: rbac.authorization.k8s.io/v1
    kind: ClusterRoleBinding
    metadata:
      name: admin-user
    roleRef:
      apiGroup: rbac.authorization.k8s.io
      kind: ClusterRole
      name: cluster-admin
    subjects:
    - kind: ServiceAccount
      name: admin-user
      namespace: kubernetes-dashboard

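Query the login token:

    kubectl -n kubernetes-dashboard describe secret $(kubectl -n kubernetes-dashboard get secret | grep admin-user | awk '{print $1}')

6. Log in to the Dashboard with the token from the output.

Once authentication succeeds, the Dashboard home page is displayed, as shown in the figure.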

Expose the Dashboard service

    kind: Ingress
    apiVersion: networking.k8s.io/v1
    metadata:
      annotations:
        nginx.ingress.kubernetes.io/backend-protocol: "HTTPS"
      name: console
      namespace: kubernetes-dashboard
    spec:
      ingressClassName: nginx
      rules:
      - host: dashboard.dabing.space
        http:
          paths:
          - path: /
            pathType: ImplementationSpecific
            backend:
              service:
                name: kubernetes-dashboard
                port:
                  number: 443
      tls:
      - hosts:
        - dashboard.dabing.space
        secretName: dabing.space-secret

VIII. Changing root-dir

Change the kubelet root-dir:

    kubectl drain nodename

Edit /usr/lib/systemd/system/kubelet.service.d/10-kubeadm.conf and add:

    Environment="KUBELET_EXTRA_ARGS=$KUBELET_EXTRA_ARGS --root-dir=/data/k8s/kubelet/"

Then move the existing kubelet directory:

    mv /var/lib/kubelet /data/k8s/

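IX. Handling expired certificates

Symptom:

    [root@k8s-01 ~]# kubectl get nodes
    Unable to connect to the server: x509: certificate has expired or is not yet valid

In version 1.16, Kubernetes components communicate over HTTPS by default, and the certificates generated at deployment time are valid for one year by default.

Run the following command to confirm that certificate expiry is the cause:

    [root@k8s-01 ~]# kubeadm alpha certs check-expiration

The output clearly shows that the certificates expire on the 29th, which pins down the problem. What remains is to renew the certificates.

The official documentation describes two ways to renew them:

1. Upgrade the cluster version

2. Renew the certificates manually

The renewal steps are as follows:

a) kubeadm alpha certs renew all

    [root@k8s-01 ~]# kubeadm alpha certs renew all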

    certificate embedded in the kubeconfig file for the admin to use and for kubeadm itself renewed
    certificate for serving the Kubernetes API renewed
    certificate the apiserver uses to access etcd renewed
    certificate for the API server to connect to kubelet renewed
    certificate embedded in the kubeconfig file for the controller manager to use renewed
    certificate for liveness probes to healthcheck etcd renewed
    certificate for etcd nodes to communicate with each other renewed
    certificate for serving etcd renewed
    certificate for the front proxy client renewed
    certificate embedded in the kubeconfig file for the scheduler manager to use renewed

After this step, check the certificates again. They have all been renewed:

    [root@k8s-01 ~]# kubeadm alpha certs check-expiration
    CERTIFICATE                EXPIRES                  RESIDUAL TIME   EXTERNALLY MANAGED
    admin.conf                 Mar 08, 2022 11:07 UTC   364d            no
    apiserver                  Mar 08, 2022 11:07 UTC   364d            no
    apiserver-etcd-client      Mar 08, 2022 11:07 UTC   364d            no
    apiserver-kubelet-client   Mar 08, 2022 11:07 UTC   364d            no
    controller-manager.conf    Mar 08, 2022 11:07 UTC   364d            no
    etcd-healthcheck-client    Mar 08, 2022 11:07 UTC   364d            no
    etcd-peer                  Mar 08, 2022 11:07 UTC   364d            no
    etcd-server                Mar 08, 2022 11:07 UTC   364d            no
    front-proxy-client         Mar 08, 2022 11:07 UTC   364d            no
    scheduler.conf             Mar 08, 2022 11:07 UTC   364d            no

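b) kubeadm alpha kubeconfig user --apiserver-advertise-address 127.0.0.1 --client-name www.baidu.com

This step updates the kubelet configuration file. Change the API server IP address and the host name to match your environment.

c) Update the .kube file

    cp -i /etc/kubernetes/admin.conf ~/.kube/config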
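The `kubeadm alpha certs` commands above match the kubeadm release this guide was written against. From kubeadm v1.20 the same subcommands are available as `kubeadm certs`, and the alpha form was later removed. The sketch below picks the right form from a version string; `certs_subcommand` is a hypothetical helper, and it assumes a version of the shape vMAJOR.MINOR.PATCH as printed by `kubeadm version -o short`.

```shell
#!/bin/sh
# Sketch: choose between "alpha certs" and "certs" for a kubeadm version.
#   $1: version string such as v1.16.3 or 1.20.0
certs_subcommand() {
  version="${1#v}"           # drop a leading "v" if present
  major="${version%%.*}"     # text before the first dot
  rest="${version#*.}"       # text after the first dot
  minor="${rest%%.*}"        # text before the next dot
  if [ "$major" -gt 1 ] || { [ "$major" -eq 1 ] && [ "$minor" -ge 20 ]; }; then
    echo "certs"
  else
    echo "alpha certs"
  fi
}
```

It could be used as `kubeadm $(certs_subcommand "$(kubeadm version -o short)") check-expiration`, leaving the command substitution unquoted so that "alpha certs" splits into two arguments.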

X. Removing a node

    kubectl drain k8s-02 --delete-emptydir-data --force --ignore-daemonsets

On the node being removed, run:

    kubeadm reset
    iptables -F && iptables -t nat -F && iptables -t mangle -F && iptables -X

Then, back on the master node:

    kubectl delete node k8s-02

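If the node cannot be drained:

    error when evicting pods/"calico-typha-695fdb59b9-vppmh" -n "calico-system" (will retry after 5s): Cannot evict pod as it would violate the pod's disruption budget

This comes from a PDB (PodDisruptionBudget). When Pods are evicted voluntarily (for example by drain), the PDB protects the number of available replicas so that workloads are not disrupted.

Scale up so that the eviction no longer violates the budget:

    kubectl scale --replicas=2 deploy/calico-typha -n calico-system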

XI. NetworkPolicy examples

    kubectl create -f - <<EOF
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-dns-access
      namespace: default
    spec:
      podSelector:
        matchLabels: {}
      policyTypes:
      - Egress
      egress:
      - to:
        - namespaceSelector:
            matchLabels:
              kubernetes.io/metadata.name: kube-system
        ports:
        - protocol: UDP
          port: 53
    EOF

    kubectl create -f - <<EOF
    apiVersion: networking.k8s.io/v1
    kind: NetworkPolicy
    metadata:
      name: allow-all
    spec:
      podSelector: {}
      ingress:
      - {}
      egress:
      - {}
      policyTypes:
      - Ingress
      - Egress
    EOF

XII. Kubernetes NFS-Client Provisioner

Install:

    helm install my-nfs-client-provisioner --set nfs.server=10.0.201.3 --set nfs.path=/storage stable/nfs-client-provisioner

Mind the NFS export permissions:

    /storage 10.0.201.0/24(rw,sync,no_root_squash,no_all_squash)

Note: run the following on all nodes.

    yum -y install nfs-utils rpcbind

Error:

    Error: failed to download "incubator/nfs-client-provisioner" (hint: running helm repo update may help)

Fix:

    helm repo add stable https://kubernetes-charts.storage.googleapis.com/

This legacy chart repository URL has since been retired; the archived stable charts are now served from https://charts.helm.sh/stable.